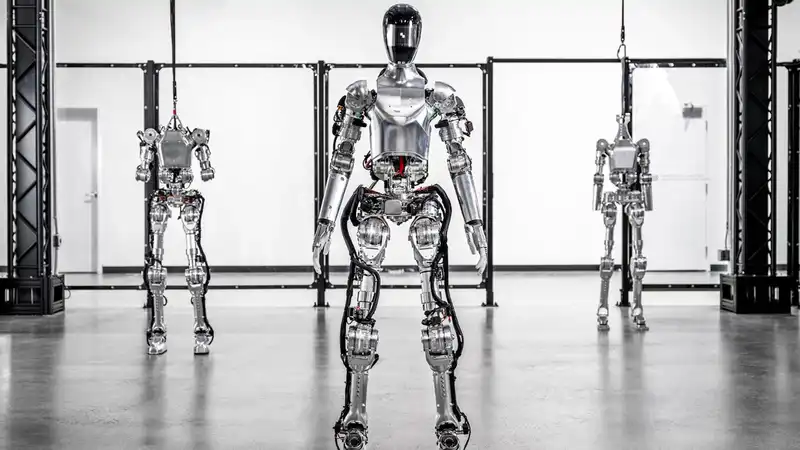

Microsoft and OpenAI are in talks to invest in Figure, a humanoid robotics startup

Bloomberg first reported the potential investment. Depending on the amount raised, Figure could be worth more than $19 billion.

The deal is not final, and no one involved has confirmed it is happening. It would be a logical investment for OpenAI, which has long explored combining robotics with large language models.

In a recent episode of Bill Gates' podcast, OpenAI CEO Sam Altman revealed that the company has already invested and will continue to invest in robotics companies.

Altman did not specifically say that robots with ChatGPT for brains are on the horizon, but the concept of using a large language model like GPT-4 (which powers ChatGPT) as a control mechanism for robots is not new.

We have already seen several research experiments in which engineers used GPT-4 to make robots pause in response to voice prompts, and Google is working on integrating its own large language model technology into robots.

CES 2024 also featured toy robots that use GPT-4 to provide natural language responses, and devices like Catflap, which uses AI vision models like GPT-4V to spot mice.

During his chat with Gates, Altman also revealed that the company once had a robotics division, but wanted to focus on the mind first. He said: "We realized that we needed to master intelligence and cognition first before we could adapt to physical form."

If robots could think for themselves, Altman predicted, and if the hardware improved enough to take advantage of this mental capacity, "we could rapidly transform the job market, especially in the blue-collar sector."

Initial predictions for AI were that it would first take blue-collar jobs, automate repetitive tasks, and leave humans out in the cold.

In reality, the opposite seems to be happening. Altman explained that 10 years ago everyone thought AI would affect blue-collar jobs first, then white-collar jobs, with no impact on creative jobs.

"Obviously, it's going in the exact opposite direction," he told Gates. "If you're going to have a robot move heavy machinery, it has to be really precise. I think this means we have to follow where the technology goes." Altman added that he has seen OpenAI models being used by hardware companies to "do amazing things with robots" using their language understanding.

While it would be nice to order a robot helper that can do the housework, cook dinner, and even take care of the kids, it will be some time before the machines reach that level, and the underlying AI models are only temporary.

Dr Richard French, a senior systems scientist at the University of Sheffield, told Tom's Guide that while the underlying AI model can provide quasi-useful human-robot interaction, the need to untangle the parts of the model a given robot does not need could make things difficult.

The type of AI behind ChatGPT "can speed up general programming and recognition as well as provide quasi-useful human-robot interaction (HMI)," he explained.

"This is like natural language processing, vision, text-to-image generation."

The downside is that the underlying AI model can be a blunt instrument, providing more than necessary for the task assigned to the robot.

"From a robot-building perspective," he says, "it's a lot of fun to undo foundation AI when you realize that the robot is a very specific use case and you've either overtrained or undertrained its model."

Dr French predicts that the human-level robotics envisioned by Sam Altman will require the development of neuromorphic computing: computers modeled on the human brain.

Dr Robert Johns, an engineer and data analyst at Hackr, agrees that the underlying model will improve robot perception, decision-making, and other abilities.

"However, robots need very specialized knowledge to perform certain tasks, just as a robot that brews coffee requires different skills than a robot that vacuums," he said. "For this reason, a dedicated base model tailored to a specific robotic application is more likely to work well than a generalized one such as GPT-4."

In other words, AI is growing rapidly, the promise of artificial intelligence is imminent, and robots are starting to walk, talk, and dance, but it may take a little longer for them to do all of the above on their own and with some autonomy.
