Google researchers have been working overtime of late, releasing new models and ideas left and right The latest is a way to turn a static image into a manipulatable avatar, born out of an AI agent playing a game

VLOGGER is not currently available for trial, but from the demo, it looks amazingly realistic, with avatars that can be created and controlled by voice

Tools like Pika Labs' Lip Sync, Hey Gen's video translation service, and Synthesia can already do something somewhat similar, but this seems like a simpler, lower bandwidth option

Currently VLOGGER is just a research project with some fun demo videos, but if commercialized it could be a new way to communicate on Teams or Slack

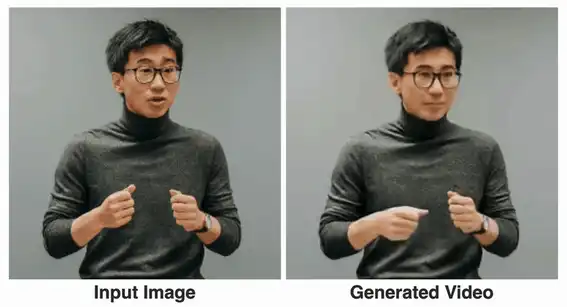

This is an AI model that can create animated avatars from still images and maintain the photorealistic appearance of the person in the photo in every frame of the final video

The model also takes an audio file of a person speaking and processes the body and lip movements to reflect the natural movements of the person as he or she speaks

This includes not only head movements, facial expressions, gaze, and blinking, but also hand gestures and upper body movements, which are created without reference to anything other than image and audio

The model is built on a diffusion architecture that powers text-to-image, video, and even 3D models such as MidJourney and Runway, but with additional control mechanisms added

Vlogger takes multiple steps to obtain a generated avatar First, it takes audio and images as input, passes them through a 3D motion generation process, then uses a "time diffusion" model to determine timing and motion, and finally upscales them into the final output

Basically, a neural network is built to predict the motion of the face, body, pose, gaze, and facial expressions over time, using the still image as the first frame and the audio as a guide

Training the model requires a large multimedia dataset called MENTOR This dataset contains 800,000 videos of various people conversing, with faces and body parts labeled moment by moment

This is a research preview, not an actual product, and while it can generate realistic motion, the videos do not necessarily match the movements of real people The core is still a diffusion model, which is prone to anomalous behavior

The team states that it also struggles with particularly large movements and diverse environments Also, it can only handle relatively short videos

According to Google researchers, one of the main use cases is video translation For example, an existing video in a particular language is filmed, and the lips and face are edited to match the new translated audio

Other potential use cases include creating animated avatars for virtual assistants and chatbots, and creating virtual characters that look and move realistically in a gaming environment

Tools that do something similar to this already exist, including Synthesia, which allows users to walk into a company office and create their own virtual avatar to make a presentation, but this new model seems to make the process much easier

One potential use is to provide low-bandwidth video communications Future versions of this model may allow for voice to video chat by animating static avatars [This could prove particularly useful in VR environments on headsets such as Meta Quest or Apple Vision Pro, as it works independently of the platform's own avatar model

Comments